One of the best practices in source control is checking in code frequently. “Frequently” is, of course, a relative term. Some take it to mean checking in code every few hours; others, every few days. Some suggest checking in every time a new feature is added. Then the question becomes: what constitutes a feature? Is a method of a class a “feature”? Is a class a “feature”? Is a feature based on a user story? A Use Case? A bulleted item in a requirements document?

Personally, I subscribe to the practice of checking in per feature. And by feature, I mean a Use Case (or a single flow of a Use Case) or a backlog requirement. Of course, some features take more time to implement than others, but that’s OK. Sometimes I’ll check in within a couple of hours; other times, after a couple of days. Rarely, however, do I leave files checked out for more than two or three days (which should give you an idea of how “large” I spec these features).

One of the many benefits of checking in frequently is that you gain the ability to roll back in-progress code changes that aren’t panning out. For some of you, this may be a completely foreign idea. “Delete my code?! Are you loco?? This code was spawned from the magnificence that is my brain. It is precious.”

If that was your reaction… well, if that was really your reaction, then you should probably stop reading. Wait, don’t stop reading. I need the subscribers… For those of you who had a somewhat subtler reaction, consider this anecdote:

This morning, I was coding up a new feature for an active development project. All previous code had been checked in before I started, so I was working from a stable build. My first task was figuring out the best way to implement the feature. In some cases, up-front design and modeling would have been useful, but in this case, where I was working with a few unfamiliar .NET classes, it made more sense to just try out a few different approaches in code and get a feel for which worked best.

In the span of about 90 minutes, I had implemented – and subsequently rolled back – five code changes before finding an approach that finally worked.

Some of the reasons for rolling back the first several changes included:

- The implementation was not going to work

- The implementation would have created more maintenance overhead than I wanted

- The scope of the implementation became much larger than what I wanted to bite off

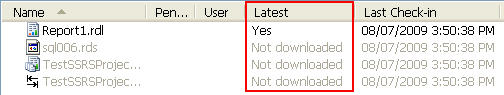

While making these changes, I also noticed Visual Studio modifying a number of auto-generated files that I don’t normally touch (including project, .settings and .resx files). It would have been very difficult, if not impossible, to go through these files manually and find every change that had been made. But because I was rolling back all of the files via TFS, there was significantly less risk of introducing unstable code (or accidentally leaving leftover code) in a later build. It was also considerably faster than manually combing through the code for stray changes.

If you’re not accustomed to rolling back code as part of your development cycle, it feels something like this:

- The first time you intentionally roll back (read: DELETE) your code changes, it’s kind of scary!

- The second time, it’s a little scary, a little exciting, maybe even a little deviant.

- Subsequent times, it’s empowering. And you wonder why you weren’t doing it sooner.

So if you are (or know someone who is) in the camp where code check-ins are almost an afterthought, consider this example and think about the value that frequent check-ins can add to the development cycle.